User Testing & Research

After kickoff meetings with our client and further research into the faculty who typically design and teach courses online, my team and I conducted active-intervention think-aloud protocols with faculty who reported previous experience teaching online. We also devised and tested scenarios for the think-aloud sessions. These were based on the data we gathered from our clients and were meant to simulate a typical interaction faculty might have with each system. We used TechSmith Morae to collect data and capture our participants' screens. Each of us alternated between facilitating the test and coding the data and taking notes, so that we all gained experience with each part of a study.

Data-Driven Analysis

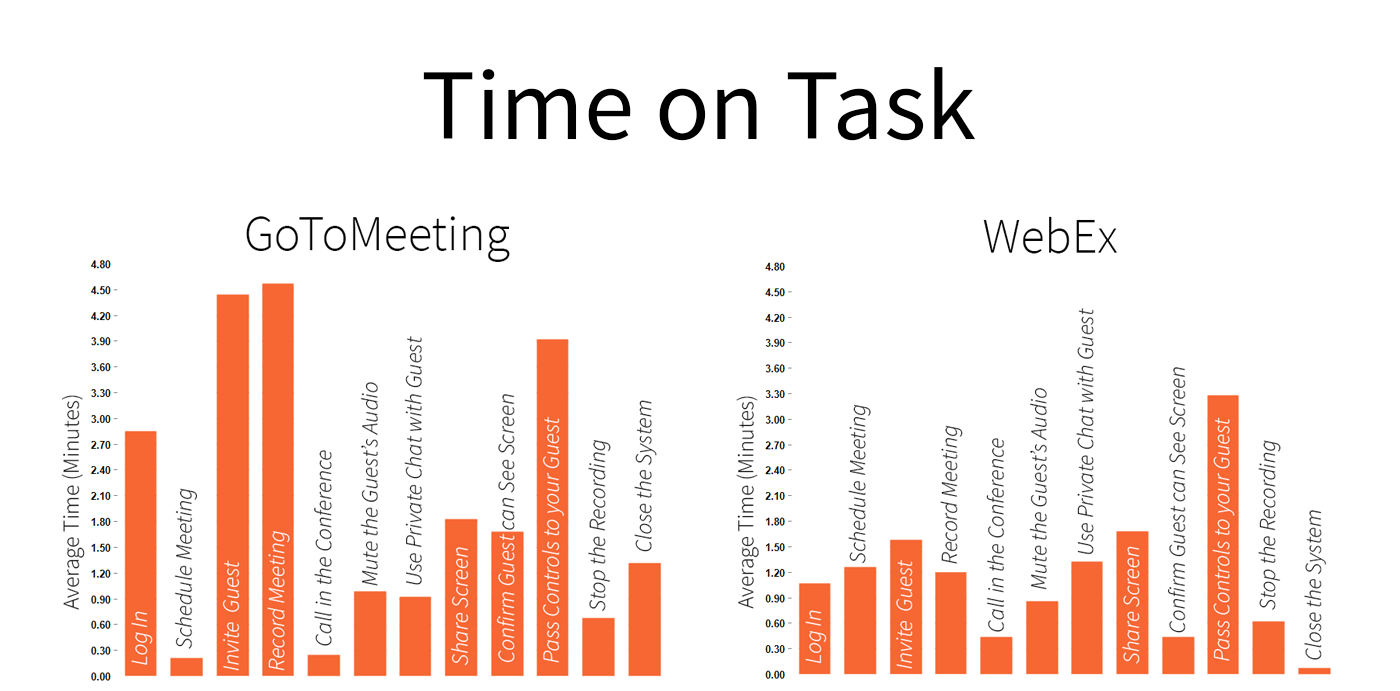

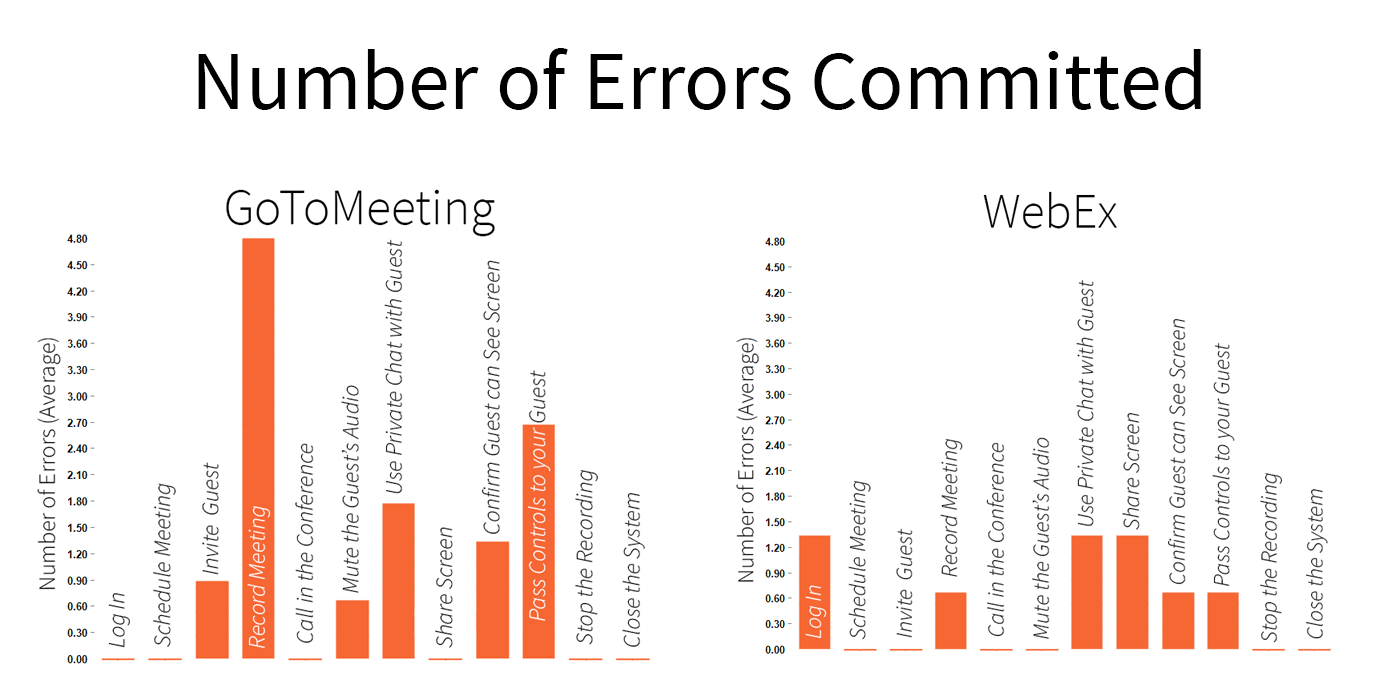

Using Morae, we compiled graphs and metrics for average time on task and error rate for both systems, which gave us a more effective way to visualize and present our results. In total, we tested five participants across twelve tasks. All were Clemson faculty with varying levels of experience teaching courses online and using each system. The scenarios we tested addressed the functionality that our interviews had identified as most critical for faculty and staff: document and screen sharing, audio and video recording, the ability to use and interact with whiteboards, and the ability to divide classes into managed breakout groups. For the full set of tasks and the corresponding results and findings from our study, see the video below or the final recommendation report we delivered to our clients here.

Delivering a Recommendation

After analyzing and coding the data, we created a video presentation for our clients as a self-contained recap of our methodology, our findings, and the recommendation we ultimately reached. We found that among Clemson faculty, WebEx was the preferred system. It offered functionality similar to GoToMeeting's, but came out marginally ahead on ease of use and user preference. As detailed in the video (07:25), users had a hard time setting up the meeting space and inviting guests in GoToMeeting, and were tripped up by its corporate language and jargon ("presenter," "meeting"). Our findings and recommendations later aided Vice-Provost Salley and Clemson Online in making a multimillion-dollar decision on which system to adopt. The video we presented to our clients can be seen below. The formal recommendation report we delivered can be accessed here.